Thoughts on Generative Models

Published:

Deep learning has exploded in recent years. Researchers are continually coming up with new and exciting ways to compose deep networks to perform new tasks and learn new things. If I can borrow Nando de Freitas’ analogy, we’re like kids playing with lego blocks, stacking these blocks together, trying to develop novel creations. When we stack these blocks into towers so tall that they become unstable, someone develops a new technique for stacking blocks, allowing us to continue constructing even bigger towers. Occasionally someone invents a new block, and if it’s useful, the rest of us scramble to incorporate this block into our towers.

The result has been a flourishing of new deep learning designs and capabilities, everything from attentional mechanisms to memory to planning and reasoning (just to name a few). Likewise, these networks are becoming more capable, improving on benchmark tasks as well as taking on new ones. While I would like to devote time to all of these developments, I want to use this blog post to explore one exciting area of research, generative models, and offer my vision for where these models may eventually take us.

biological inspiration

As a student of both machine learning and neuroscience, I’m continually trying to understand the ways in which these two domains align and differ, particularly with regard to deep learning and the human brain. With the ultimate goal of building general machine intelligence, my guiding assumption is that by understanding the capabilities of the human brain and having theories as to how it accomplishes these tasks, we can better direct our efforts in deep learning, whether or not we try to emulate the brain’s computational principles. Seeing one solution to a problem tends to make solving that problem easier, even if it only provides you with a better appreciation of the problem itself.

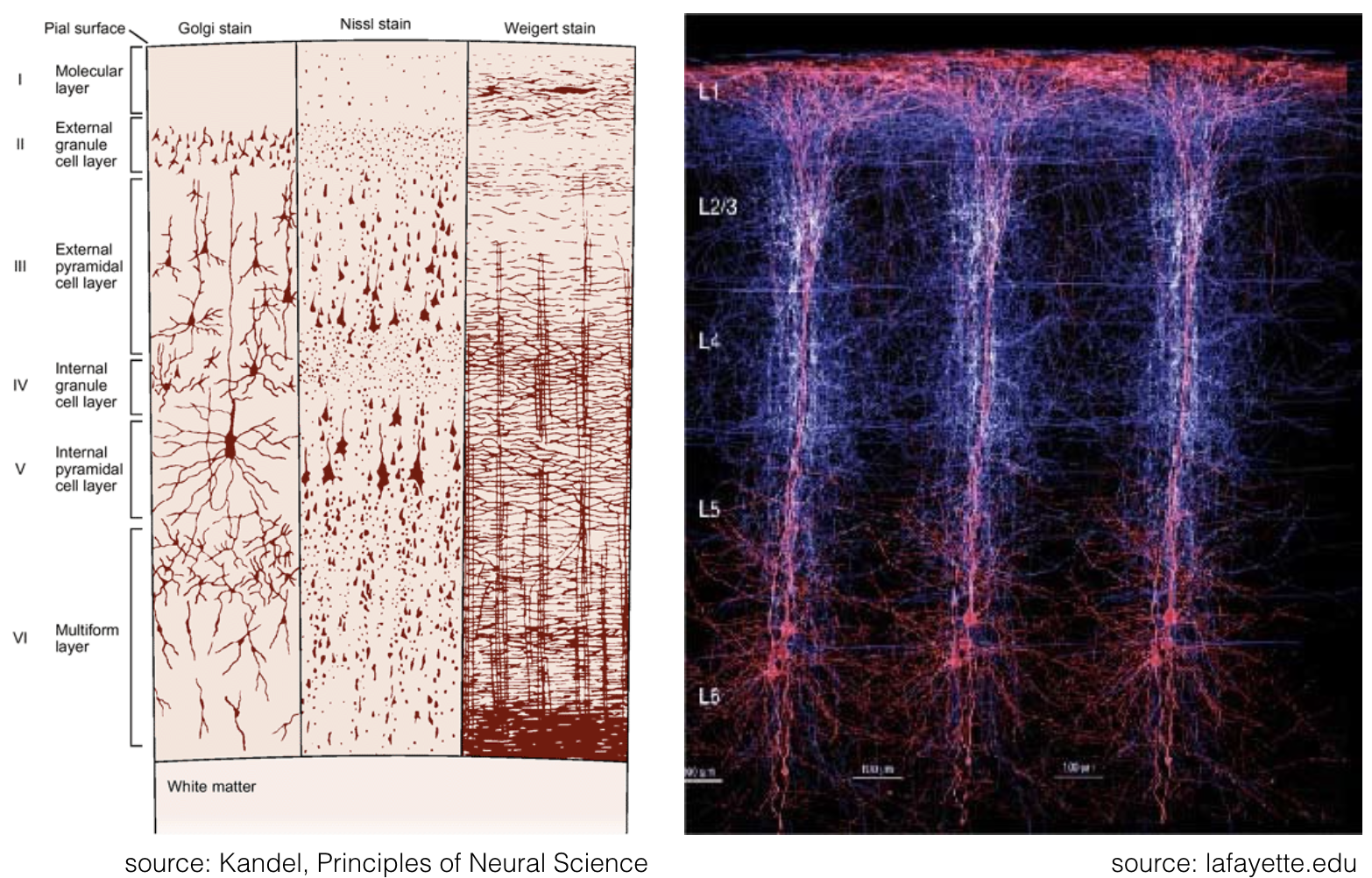

Deep learning takes inspiration from biology. In particular, much of the work in deep feedforward neural networks (especially convolutional neural networks) is loosely based on the sensory processing pathways in the mammalian neocortex. This structure, the folded exterior portion of the brain, is involved in processing sensory signals, storing memories, planning and executing actions, as well as reasoning and abstract thought. With such a wide variety of capabilities, one might expect to find drastic anatomical differences between regions that are specialized for one task over another. However, the neocortex consists of a surprisingly uniform six-layer architecture, with a columnar structure of neurons between layers. It appears as though evolution has found a general purpose computing architecture, capable of performing an array of tasks that are useful for survival.

Neocortical anatomical structure. Left: six-layered architecture of neocortex, differentiated by neuron morphologies. Right: Columnar structure between layers in neocortex. Neurons within a column act as a single computational unit.


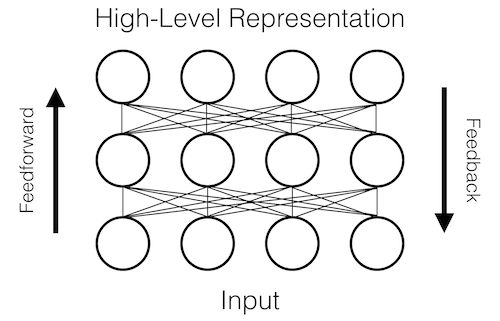

It appears, then, that the specialized functions of the neocortex are instead a function of the connections between neurons, columns, and areas. While we don’t have a detailed understanding of these connections or even the computational principles employed by different areas, we do have some, albeit vague, notion of the computations being performed by the sensory processing regions of the neocortex. For instance, we know that visual processing areas are roughly composed into a computational hierarchy, consisting of two main processing streams that communicate with each other. Neurons progressively further up the hierarchy appear to encode more abstract qualities, going from simple colors, dots, and lines in low level areas up to shapes, objects, and complex motions near the top of the hierarchy. We also know that sensory processing involves both feedforward and feedback signals. Feedforward signals are thought to propagate sensory information for higher level recognition, action, and thought, whereas feedback signals are thought to mediate sensory predictions and attention. Also, we know that the human brain is capable of inferring low level details from high level concepts. If I ask you to describe your childhood bedroom, you would have no problem describing your bed, the walls, and perhaps even some of your toys.

Simple schematic of sensory processing in neocortex. Circles represent computational operations. Computation is performed in a roughly hierarchical manner. The architecture contains both feedforward and feedback pathways.


The human neocortex is clearly a highly complex system sitting inside the even more complex system of the human brain. The broad picture painted here is only a caricature of its intricacies. I’ve left out many of the biological details, some of which may prove to be important for understanding the computations being performed. But starting from these simplified assumptions, I’ll argue that we may be able to construct a non-biological system that at least approximates the sensory processing computations and capabilities of the human brain. I contend that the sensory processing pathways of the mammalian neocortex are some form of hierarchical generative model that consists of both feedforward and feedback networks.

generative models

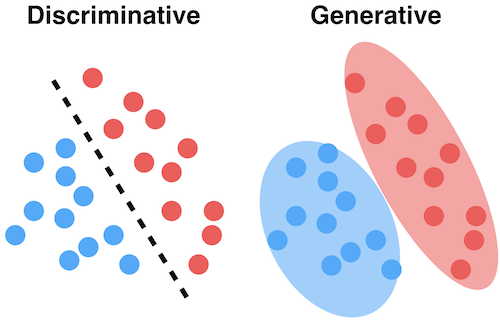

Creating and training a deep neural network involves 1) choosing a network architecture, 2) choosing a learning principle, and 3) choosing some method for optimizing the network according to that principle. Broadly speaking, all machine learning models fall into one of two categories: discriminative and generative. While both models are capable of learning data distributions, they approach the problem in different ways. Discriminative models focus on trying to simply separate different data examples, typically by some specified class. Generative models focus on trying to actually model the underlying data distribution, which can in turn be used to separate examples by class. However, by modeling the underlying data distribution, generative models can also use this distribution to generate data examples. Discriminative models can only discriminate between classes of data examples. Both types of models have pros and cons, which are often dependent on the task at hand, but both have enjoyed many successes.

Discriminative models simply try to discriminate between different classes of data points. Generative models try to model the underlying distribution of the data.
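To make the distinction concrete, here is a minimal sketch on a made-up one-dimensional dataset. The class names `GenerativeClassifier` and `ThresholdClassifier`, the Gaussian class-conditional assumption, and the toy data are all my own illustrative choices, not something taken from a particular paper. The generative model fits a distribution per class, so it can both classify and sample; the discriminative model only learns a decision boundary.

```python
import math
import random


def log_normal_pdf(x, mean, std):
    """Log density of a 1D Gaussian N(mean, std^2) at x."""
    return -0.5 * math.log(2 * math.pi * std ** 2) - (x - mean) ** 2 / (2 * std ** 2)


class GenerativeClassifier:
    """Models p(x | class) as a Gaussian and p(class) as a frequency."""

    def fit(self, xs, ys):
        self.params = {}
        for label in sorted(set(ys)):
            members = [x for x, y in zip(xs, ys) if y == label]
            mean = sum(members) / len(members)
            var = sum((m - mean) ** 2 for m in members) / len(members)
            self.params[label] = (mean, math.sqrt(var), len(members) / len(xs))
        return self

    def _log_joint(self, x, label):
        mean, std, prior = self.params[label]
        return log_normal_pdf(x, mean, std) + math.log(prior)

    def predict(self, x):
        # Bayes' rule: pick the class with the largest log p(x | class) + log p(class).
        return max(self.params, key=lambda label: self._log_joint(x, label))

    def sample(self, label, rng):
        # Because the data distribution is modeled, new examples can be drawn.
        mean, std, _ = self.params[label]
        return rng.gauss(mean, std)


class ThresholdClassifier:
    """Learns only a boundary between class 0 and class 1; it cannot generate data."""

    def fit(self, xs, ys):
        def errors(threshold):
            return sum((x > threshold) != (y == 1) for x, y in zip(xs, ys))

        self.threshold = min(sorted(xs), key=errors)
        return self

    def predict(self, x):
        return 1 if x > self.threshold else 0


# Toy data (assumption): class 0 centered at -2, class 1 centered at +2.
rng = random.Random(0)
xs = [rng.gauss(-2.0, 1.0) for _ in range(200)] + [rng.gauss(2.0, 1.0) for _ in range(200)]
ys = [0] * 200 + [1] * 200

generative = GenerativeClassifier().fit(xs, ys)
discriminative = ThresholdClassifier().fit(xs, ys)

print("generative prediction at x=3:", generative.predict(3.0))
print("discriminative prediction at x=3:", discriminative.predict(3.0))
print("a generated class-1 example:", generative.sample(1, rng))
```

Both models separate the two classes here, but only the generative one has a `sample` method, which is the asymmetry the paragraph above describes.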


Neural networks, especially in this new era of deep learning, have typically taken on a discriminative form. The convolutional neural networks that I train and use for object detection and classification are discriminative. Similar types of models exist for speech recognition, reinforcement learning, and other areas. These models have had many successes in the last few years as benchmarks continue to improve and new tasks are taken on. This has mainly been fueled by better datasets, more computing power, and larger models. However, something that struck me when I first started working in deep learning was an unsettling feeling that these models are not truly learning in the same way in which humans do. Take object classification for example. When training a deep network to classify objects, one typically needs hundreds or thousands of example images to successfully generalize to unseen examples. Of course, one can somewhat get around this through creative forms of data augmentation and transfer learning, but the point remains that these systems need far more examples than humans do to learn new concepts. And mind you, each of those examples needs a corresponding label. Can you imagine if you had to learn a new object by seeing it hundreds of times in different contexts while somebody said its name?

Note that there are actually two problems here. The first is the ability of the model to (quickly) associate some high level representation of the input with some specified class, assimilating this information into the model’s body of knowledge. This is generally studied in the context of low-shot learning, where a model is expected to learn to classify a new class with minimal examples. The second problem is one of developing a high level representation in which the model can easily generalize between different instances of the same concept. This is the problem we are most concerned with. Clearly, these two problems are related: a representation that generalizes better will allow the model to learn new concepts more quickly. I strongly believe that the best representation for easy generalization is one in which the constituent high level parts of the input are disentangled, or in a sense, understood. For object recognition, this would entail having a representation in which objects are recognized irrespective of the specific context in which they are seen; the model understands that objects and contexts are separate concepts (as far as recognition is concerned).

Classifications of three images of ducks by a state-of-the-art convolutional neural network. The left image shows that the network can successfully classify a duck when it is in plain sight in a typical context: in water. The center image shows that once the duck is in a non-typical context, partially occluded by foliage, the network is unable to correctly classify the image. Finally, the right image shows the ducks in an even more obscure context, highly occluded. Again, the network is unable to correctly classify the ducks.


Discriminative models have a difficult time encapsulating an understanding of the examples themselves because they do not model the data generation process. Unless explicitly trained to separate out different concepts, these networks tend to overfit to the data with which they are trained. They simply look for patterns in the data that are helpful for the task they are trained to perform. We can argue whether this amounts to ‘understanding,’ but bear with me for the time being. A discriminative model succeeds when it has access to copious amounts of data (seeing most of the possible patterns from the data distribution) and the capacity to store this information. However, when presented with new data examples or familiar examples in new contexts, these models are often unable to generalize well. The object domain we tend to use in the Caltech vision lab is birds. Imagine a network that has only ever seen images of ducks in or near water. During training, it learns that water is a key feature for classifying ducks, and the network’s weights adjust so as to be selective for water patterns within images when classifying ducks. This is, in fact, what actually happens when training a discriminative model for this task. However, water does not determine whether or not a duck is a duck; it’s merely a helpful contextual clue. When you or I see a duck in a new context, there may be an initial confusion, but we often have no problem generalizing objects to entirely new contexts.

So how do we fix this problem? How do we move beyond current deep learning techniques to arrive at some form of deep understanding? The answer, I’m convinced, is by building a generative model. Generative models cannot as easily get away with simply finding useful patterns in the data. They are required to build a model of how the data is generated. Depending on the expressive power of the model, this implies developing some level of understanding of the underlying data generation mechanism. When trained in an appropriate way, such a model could, in theory, disentangle the underlying factors that influence the world around us. In the setting of our duck example, a generative model could potentially understand that there are objects called ducks that have bills, wings, eyes, etc. and that such objects exist independent of their context. In other words, a duck is what makes a duck a duck. With this level of understanding, we may be justified in hoping that the model will more easily generalize to new examples and classes through transfer learning.

In the rest of this blog post, I will look at one particular generative model, the variational auto-encoder. Afterward, I’ll point to a few recent papers, their possible connections to neuroscience, and where I think things are headed.

variational autoencoders

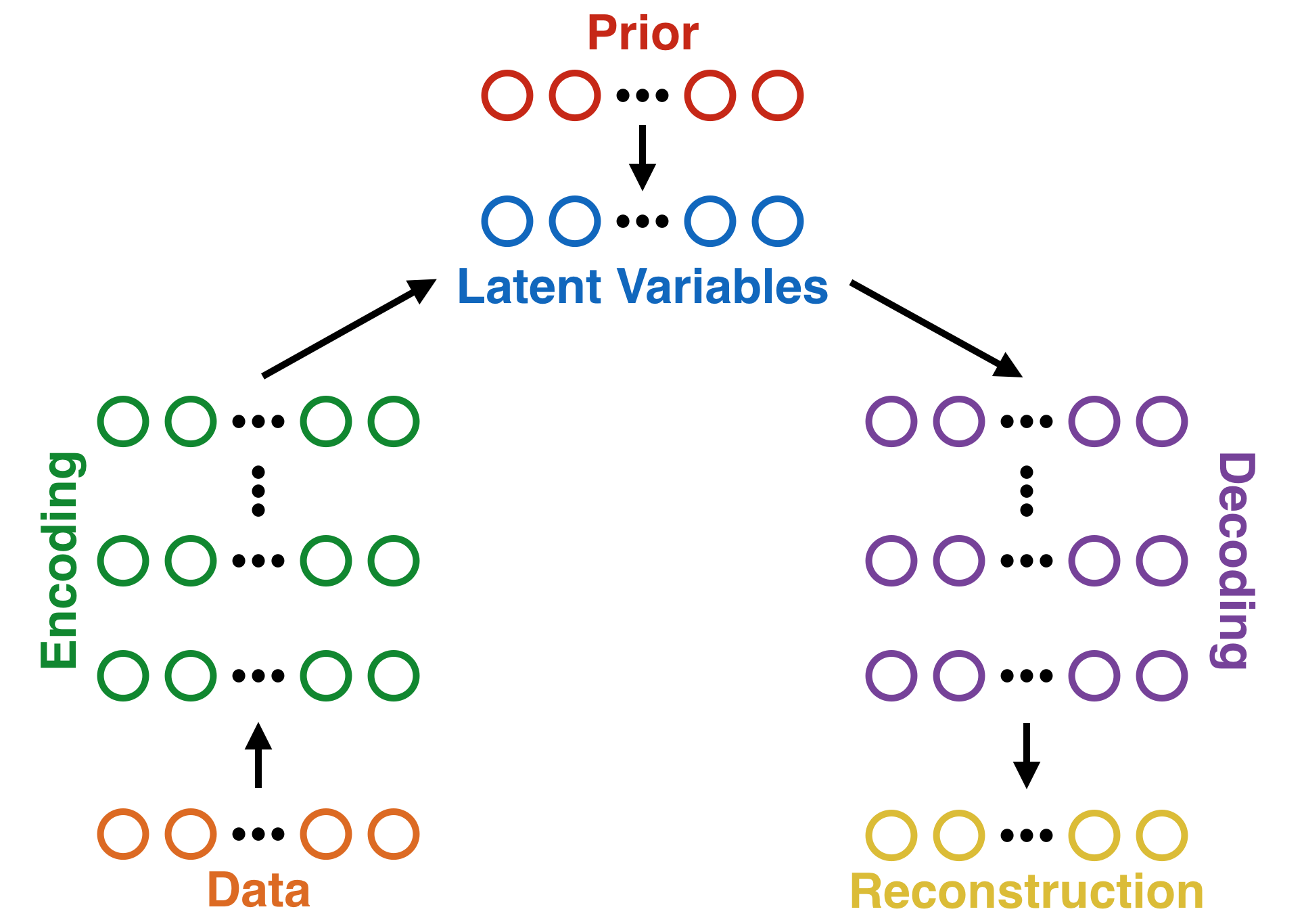

Variational auto-encoders are a form of latent variable generative model. Unlike transformation models (e.g. generative adversarial networks), which convert random noise into data examples, or autoregressive models (e.g. Pixel RNN/CNN, WaveNet), which condition the generation of parts of an example on the previously generated parts, latent variable models have a greater potential for more levels of abstraction. Variational auto-encoders were independently developed by Kingma and Welling and Rezende, Mohamed, and Wierstra in 2013. I will spare you the details of the derivation and will instead point you toward the two original papers and this excellent tutorial. The overall model is composed as follows. We have input data $\mathbf{x}$ and a set of latent variables $\mathbf{z}$, which have a prior probability distribution $p(\mathbf{z})$. We have a recognition model $q(\mathbf{z} | \mathbf{x})$, an encoding neural network that approximates the true posterior of the latent variables. We also have a generative model $p(\mathbf{x} | \mathbf{z})$, which is a decoding neural network that generates data by taking the latent variables as input. For tractability, the approximate posterior is assumed to be a multivariate Gaussian with diagonal covariance. Similarly, the prior is set to be $\mathcal{N}(\mathbf{z}; 0, \mathbf{I})$. The model is trained by maximizing the variational lower bound on the log likelihood, which for an example $\mathbf{x}^{(i)}$ is written as

\[\mathcal{L} (\mathbf{x}^{(i)}) = \mathbb{E}_{q(\mathbf{z}|\mathbf{x}^{(i)})}\left[\log p(\mathbf{x}^{(i)}|\mathbf{z})\right] - D_{KL}\left(q(\mathbf{z}|\mathbf{x}^{(i)})||p(\mathbf{z})\right).\]

When adding the assumptions on the prior and posterior, this becomes

\[\mathcal{L} (\mathbf{x}^{(i)}) \approx \frac{1}{L} \sum_{\ell = 1}^{L} \log p(\mathbf{x}^{(i)}|\mathbf{z}^{(i,\ell)}) + \frac{1}{2} \sum_{j=1}^J \left( 1 + \log((\sigma_j^{(i)})^2) - (\mu_j^{(i)})^2 - (\sigma_j^{(i)})^2 \right),\]

\[\text{where } \mathbf{z}^{(i,\ell)} = \mu^{(i)} + \sigma^{(i)} \odot \epsilon^{(\ell)} \text{ and } \epsilon^{(\ell)} \sim \mathcal{N}(0,\mathbf{I}).\]

$L$ is the number of samples drawn from the approximate posterior for each example, and $J$ is the number of latent variables (the length of $\mathbf{z}$). Let’s deconstruct this equation. The first term is a Monte Carlo estimate of the expected log likelihood of the input under the generative model, with the expectation taken over the approximate posterior. This is simply a reconstruction term: how well the model does at reconstructing the input it is given. The second term is the negative KL divergence between the approximate posterior and the prior, written in closed form for Gaussians; because the sum equals $-D_{KL}$, it enters with a plus sign. This regularizes the latent variables toward the prior. If a particular latent variable is unnecessary for reconstructing the input, its approximate posterior is pushed toward the prior, a Gaussian with zero mean and unit variance.
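As a rough illustration of how the bound is estimated for a single example, here is a small standard-library sketch. It computes the bound as a sampled reconstruction term minus a closed-form KL term. The function names and the decoder `toy_log_likelihood` (a unit-variance Gaussian centered on $\mathbf{z}$) are hypothetical stand-ins of my own; in a real VAE, $\mu$ and $\sigma$ would come from the recognition network and the log likelihood from the decoding network.

```python
import math
import random


def kl_to_standard_normal(mu, sigma):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over the J latents."""
    return -0.5 * sum(1 + math.log(s ** 2) - m ** 2 - s ** 2 for m, s in zip(mu, sigma))


def reparameterize(mu, sigma, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, I)."""
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]


def toy_log_likelihood(x, z):
    """Hypothetical decoder: log N(x; z, I). Stands in for a decoding network."""
    return sum(-0.5 * math.log(2 * math.pi) - 0.5 * (xi - zi) ** 2 for xi, zi in zip(x, z))


def elbo_estimate(x, mu, sigma, log_likelihood, num_samples, rng):
    """Monte Carlo reconstruction term minus the analytic KL term."""
    reconstruction = sum(
        log_likelihood(x, reparameterize(mu, sigma, rng)) for _ in range(num_samples)
    ) / num_samples
    return reconstruction - kl_to_standard_normal(mu, sigma)


# Made-up numbers standing in for an input and the encoder's outputs.
x = [0.5, -1.0]
mu = [0.4, -0.8]
sigma = [0.5, 0.5]

print("KL term:", kl_to_standard_normal(mu, sigma))
print("ELBO estimate:", elbo_estimate(x, mu, sigma, toy_log_likelihood, 10, random.Random(0)))
```

Sampling through `reparameterize` rather than drawing $\mathbf{z}$ directly is what lets gradients flow back to $\mu$ and $\sigma$ when this quantity is optimized with automatic differentiation, which the sketch leaves out.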

Schematic of a one level VAE.


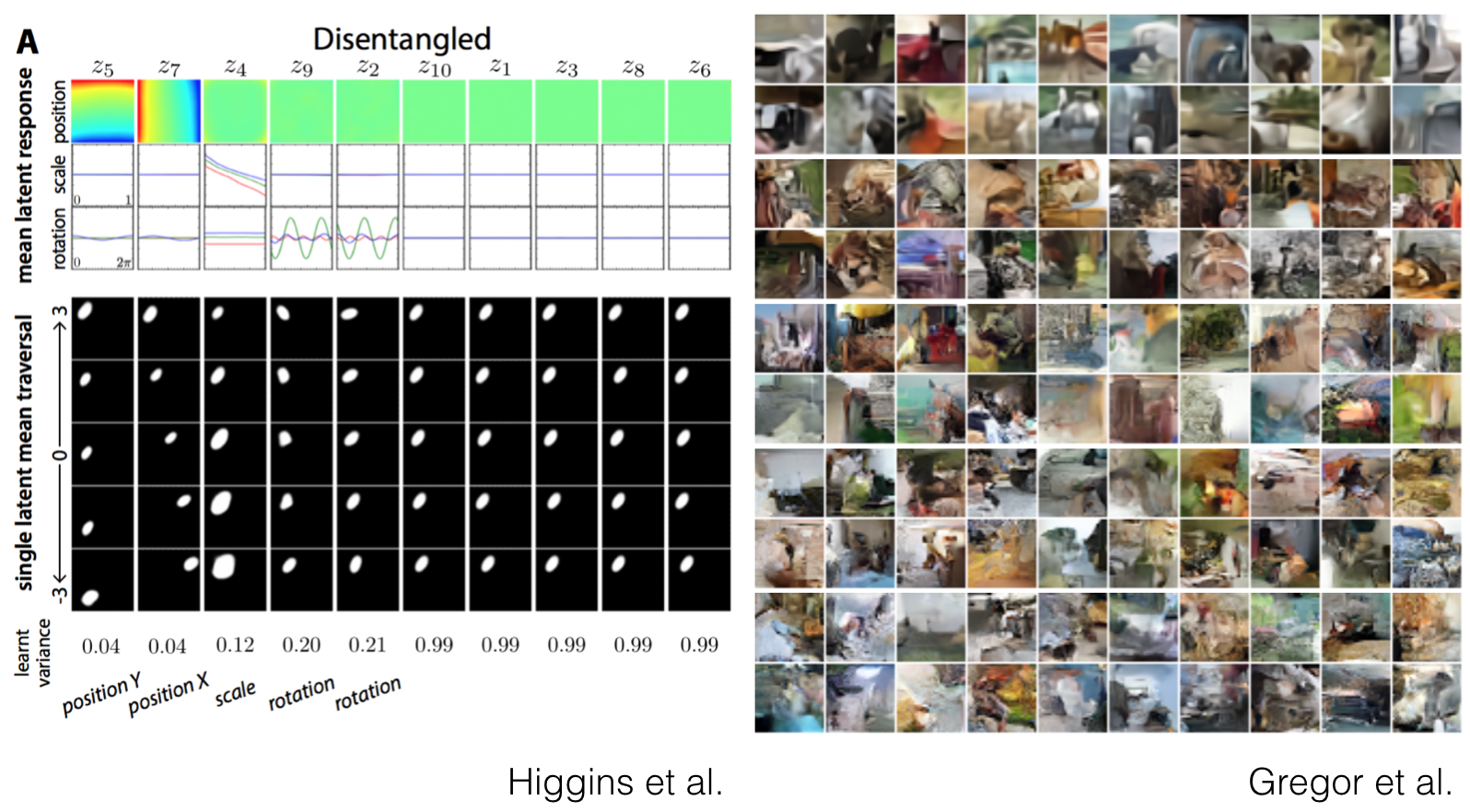

The independence assumption on the latent variables has some interesting consequences. By weighting either one of the terms in the VAE equation, we can increase or decrease the amount of regularization during training. Decreasing the weight on the regularization term results in latent variables that are free to be whatever value is best for reconstruction, approaching maximum likelihood learning as we decrease the weight toward zero. Increasing the weighting, however, results in latent variables that are turned off, with the remaining latent variables being strongly encouraged toward independence. Recently, Higgins et al. showed that by increasing the weighting on the regularization term, one can begin to disentangle the factors of variation underlying the data distribution. For instance, they learn to separate translation, rotation, and scale on a simple 2D shapes dataset. They also learn the different factors in Atari games, including score, health, and position (see here). While they demonstrate this phenomenon on only a handful of toy examples, the results offer an encouraging path forward toward deep understanding. This suggests that with a powerful enough model, one might be able to uncover the factors of variation underlying the natural world. This would be a momentous step beyond simply matching patterns. In this way, a machine could actually understand the world we live in, more easily enabling it to perform a wide array of higher level cognitive abilities, including memory, planning, and reasoning.
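The weighting idea can be sketched in a few lines. Here `beta` is my name for the weight on the regularization term, and `active_latents` with its threshold is a hypothetical helper for spotting which latent variables have been "turned off" (those whose per-dimension KL divergence from the prior is close to zero). The numbers are invented for illustration.

```python
import math


def kl_per_dimension(mu, sigma):
    """KL( N(mu_j, sigma_j^2) || N(0, 1) ) for each latent dimension j."""
    return [-0.5 * (1 + math.log(s ** 2) - m ** 2 - s ** 2) for m, s in zip(mu, sigma)]


def weighted_objective(reconstruction, mu, sigma, beta):
    """Reconstruction term minus beta times the total KL regularizer."""
    return reconstruction - beta * sum(kl_per_dimension(mu, sigma))


def active_latents(mu, sigma, threshold=1e-2):
    """Indices of latents whose posterior differs noticeably from the prior."""
    return [j for j, kl in enumerate(kl_per_dimension(mu, sigma)) if kl > threshold]


# Invented encoder outputs: the middle latent sits exactly at the prior.
mu = [1.5, 0.0, -0.8]
sigma = [0.3, 1.0, 0.5]
reconstruction = -3.0  # placeholder log likelihood value

print("active latents:", active_latents(mu, sigma))
for beta in (0.0, 1.0, 4.0):
    print("beta =", beta, "objective =", weighted_objective(reconstruction, mu, sigma, beta))
```

With `beta = 0` the objective is just the reconstruction term, and as `beta` grows, any latent that carries information pays a larger price, so only the latents that matter most for reconstruction stay active.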

Increasing the weight of the independence prior on the latent variables in VAEs induces ‘conceptual compression,’ thereby disentangling the underlying factors of variation generating the data. Left: Disentangled latent variables on a 2D shapes dataset. The first five latent variables encode position, scale, and rotation of a 2D shape. The other latent variables are set to the prior. Right: The higher images are produced by a VAE with a larger relative amount of regularization and appear to have more visually cohesive global structure. The model for the lower images has less regularization and focuses too much on fine details.


Another hint of the potential of such a strategy is seen in a recent paper by Gregor et al., in which they train a VAE with recurrent encoding and decoding functions to progressively generate natural images. Similarly to Higgins et al., they investigate weighting the terms in the variational lower bound. What they notice is that models with higher amounts of regularization tend to generate images with more coherent global structure. Admittedly, these images are still quite blurry, which is likely the result of a number of things (a simplified approximate posterior distribution form, no attentional mechanism, etc.), but the fact remains that these models appear to have internalized the concepts of certain objects better than their less regularized counterparts. While these results are slightly more difficult to interpret, they at least seem to agree with the work of Higgins et al. This offers hope that learning latent variable models of a more expressive form may allow us to teach a machine to understand the natural world.

relation to sensory processing in the neocortex

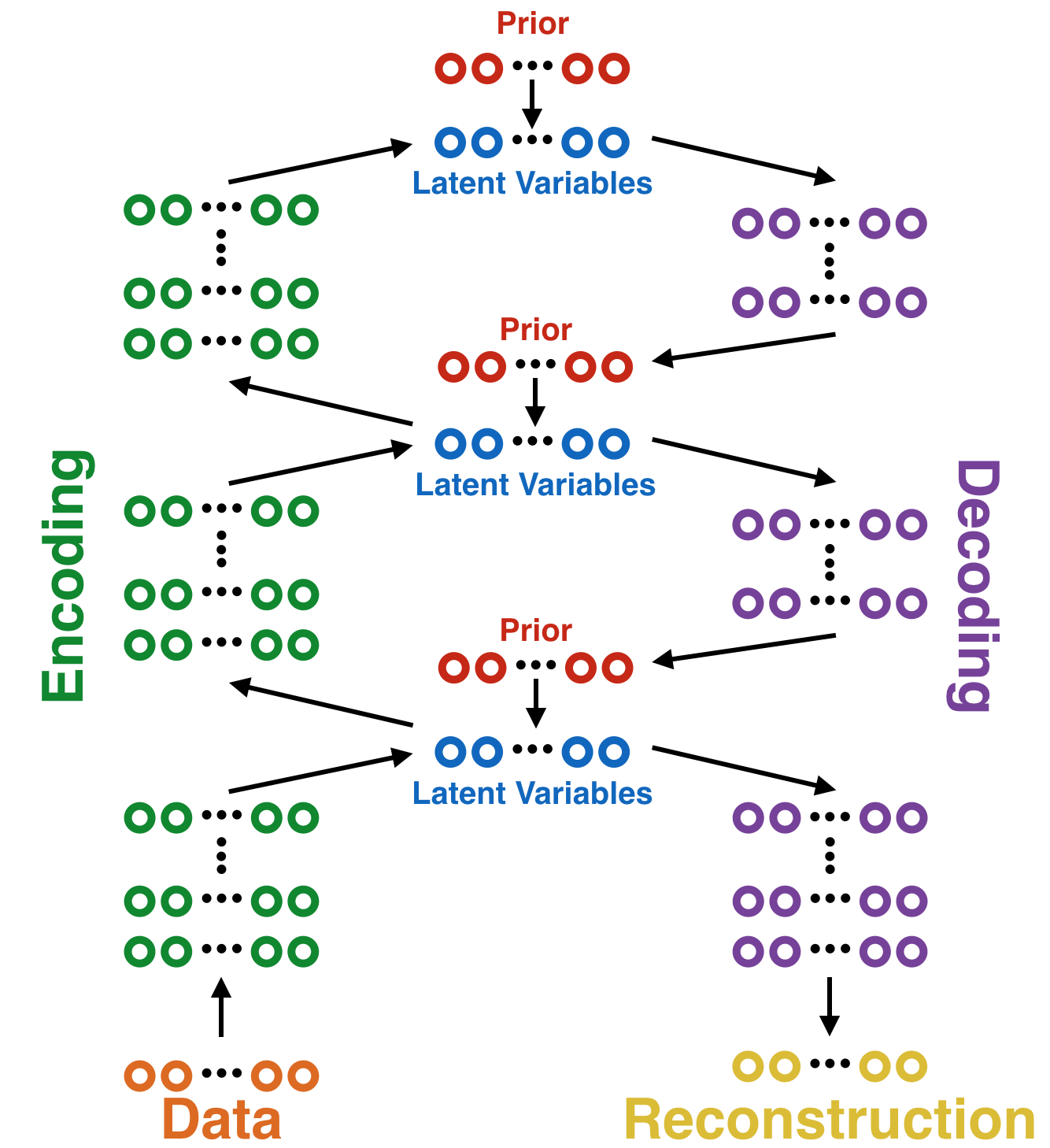

As discussed earlier, the sensory processing pathways in the neocortex appear to be some form of hierarchical generative model consisting of feedforward and feedback pathways. In this regard, VAEs may be performing a crude approximation of neocortical processing. Feedforward processing in the neocortex would thus correspond to the recognition network, encoding stimuli by selecting out progressively more abstract features from the input. Similarly, feedback processing would correspond to the generative network, whereby activity in high level areas is used to generate predictions in low level areas. However, as we compare neocortical sensory processing with VAEs, one immediate difference we see is that VAEs are typically formulated with a single level of latent variables. Neocortex, on the other hand, could consist of many such stages. It’s possible that the stochastic latent variables correspond to the neuron activities themselves, with the recognition network corresponding to dendritic processing. The generative network may likewise correspond to dendritic processing in the feedback pathway.

Schematic of a possible three level VAE.


With multiple levels of latent variables, during the feedforward pass, one would propagate inputs through the entire network, generating activations (using the approximate posterior) for each of the latent variables at each level. At the top of the hierarchy, one might insist on using an independence prior like that used in the one level VAE. During the feedback pass, we generate predictions of the lower level activations using the higher level activations. We can view this as a top down prior on the activations at each processing level, acting as a generalized version of the independence prior used in the one level VAE. This overall processing scheme is not a new idea; it is referred to as hierarchical Bayesian inference, and was proposed as a neocortical sensory processing technique by Lee and Mumford in 2003. While there is still much debate as to how neocortex actually learns and processes sensory stimuli, this approach at least seems plausible.
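One way to picture the top down prior is as a per-level KL term between the bottom up approximate posterior and the prediction coming from the level above. The sketch below assumes, purely for illustration, that both are diagonal Gaussians; the function names and the three levels of made-up numbers are hypothetical. At the top level the prior is the standard normal, so the term reduces to the one used in the one level VAE.

```python
import math


def kl_diag_gaussians(mu_q, sigma_q, mu_p, sigma_p):
    """KL( N(mu_q, diag(sigma_q^2)) || N(mu_p, diag(sigma_p^2)) ), summed over dimensions."""
    return sum(
        math.log(sp / sq) + (sq ** 2 + (mq - mp) ** 2) / (2 * sp ** 2) - 0.5
        for mq, sq, mp, sp in zip(mu_q, sigma_q, mu_p, sigma_p)
    )


def per_level_kl(posteriors, priors):
    """One KL term per level, pairing each posterior with its top down prior."""
    return [
        kl_diag_gaussians(mu_q, sigma_q, mu_p, sigma_p)
        for (mu_q, sigma_q), (mu_p, sigma_p) in zip(posteriors, priors)
    ]


# Levels listed bottom to top; each entry is (mu, sigma). All values are made up.
posteriors = [
    ([0.9, -0.2], [0.4, 0.6]),  # level 1, from the feedforward pass
    ([0.3], [0.8]),             # level 2
    ([1.0], [1.0]),             # level 3 (top)
]
priors = [
    ([1.0, 0.0], [0.5, 0.5]),   # predicted by level 2 in the feedback pass
    ([0.2], [1.0]),             # predicted by level 3
    ([0.0], [1.0]),             # independence prior N(0, I) at the top
]

for level, kl in enumerate(per_level_kl(posteriors, priors), start=1):
    print("level", level, "KL:", kl)
```

Each term is small when the feedback prediction already matches the feedforward activity, which is the sense in which the higher level "explains" the lower one.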

In the deep learning community, this approach does not currently seem to be an area of intense interest. Furthermore, it is difficult to train latent variable models of this form. However, recent work by Yoshua Bengio and his colleagues proposes a biologically plausible method for learning, called TargetProp, that appears to agree with this framework. The basic idea is as follows: rather than propagating error derivatives throughout the entire network as in backpropagation (which many consider to be biologically implausible), one constructs feedforward and feedback networks, with the feedback network generating activation predictions (targets) for the feedforward network. Learning occurs in both networks by trying to match the predictions to the actual activations. In the hierarchical Bayesian framework, predictions can be considered top down priors, while bottom up activities are samples from the approximate posterior. The goal of learning is to try to match these two activities as closely as possible. In general terms, we want to learn a model of the world by continuously predicting a prior on what will happen next, then updating our predictions if they don’t align with reality’s posterior.

conclusion

Through building models that are more closely inspired by the brain, we can hope to 1) gain a better understanding of our brains and how we learn and process information as well as 2) build machines that are increasingly capable of understanding the world that we live in. What I have presented here is in the very early stages. It is a departure from many of the current techniques for deep learning that are used in practice, which are largely discriminative models. However, there is a great deal of promise behind this technique in moving us toward models that actually understand aspects of the world. I feel this is a vital condition for creating machines that are capable of interacting with us and their environments in meaningful ways. Such models could eventually facilitate machines that are able to plan, reason, and perform other types of abstract thought that we typically associate with human intelligence.

Be sure to check out recent work on Ladder Variational Autoencoders as well as Gerben van den Broeke’s excellent master’s thesis. These works agree nicely with the further directions outlined here, and go into detail about how to incorporate more biological inspiration to arrive at more powerful models and algorithms.